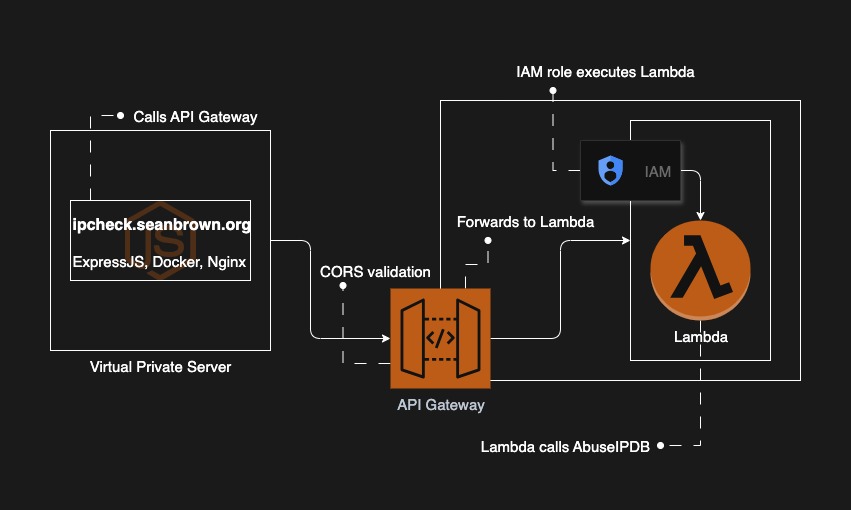

IPCheck is a microservice that uses AbuseIPDB to gather publicly available information about a visitor’s IP address. I built it with AWS (Lambda, API Gateway, S3, and Systems Manager Parameter Store), Express, Python, Nginx, and Docker. I host it here, and the repo is here. One note: an ellipsis (...) in a code block marks code that exists but isn’t needed for the explanation. Before the breakdown, see the diagram above for a broad overview.

Infrastructure — OpenTofu

I built the infrastructure with OpenTofu, an open-source fork of Terraform maintained under the Linux Foundation. OpenTofu was forked after HashiCorp changed Terraform’s license in 2023.

Secrets

You’ll notice there are no variables.tf or terraform.tfvars files in the repo. This is deliberate: instead of passing the AbuseIPDB API key in as a variable, I stored it in AWS Systems Manager (SSM) Parameter Store as a SecureString by running this command locally:

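```shell
aws ssm put-parameter --name /prod/ipdb/api-key --type SecureString --overwrite --value '<AbuseIPDB API Key Here>'
```

Because the command doesn’t pass --key-id, SSM encrypts the value with the AWS managed key alias/aws/ssm. Next, I reference the parameter as a data source with the following block in my lambda.tf file: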

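```hcl
data "aws_ssm_parameter" "ipdb" {
  name            = "/prod/ipdb/api-key"
  with_decryption = false
}
```

Because with_decryption is false, OpenTofu reads only the encrypted value, so the plaintext key never ends up in the state file. I then pass the parameter’s name (not the key itself) to the Lambda function as an environment variable, also in lambda.tf: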

```hcl
environment {
  variables = {
    IPDB = data.aws_ssm_parameter.ipdb.name
  }
}
```

The Python code in the Lambda function reads the parameter name from IPDB and fetches the decrypted key from SSM at runtime.
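Since IPDB carries only the parameter’s name, the function has to ask SSM for the key at runtime, and if that call sits inside the handler it runs on every invocation. A minimal sketch of caching the key for the life of the execution environment, assuming a rotated key only needs to take effect on new environments. `get_ipdb_key` and `_cached_key` are hypothetical names, not code from the repo, and the SSM client is passed in so the function can run without AWS credentials:

```python
import os
from typing import Any, Optional

# Hypothetical module-level cache. Lambda keeps the module loaded between warm
# invocations, so the key is fetched from SSM at most once per environment.
_cached_key: Optional[str] = None


def get_ipdb_key(ssm_client: Any, env_var: str = "IPDB") -> str:
    """Return the decrypted AbuseIPDB key whose parameter name is in env_var.

    ssm_client should behave like boto3.client("ssm"); passing it in (an
    assumption of this sketch) lets the function run without AWS access.
    """
    global _cached_key
    if _cached_key is None:
        # The environment variable holds the parameter *name*, not the secret.
        param_name = os.environ[env_var]
        resp = ssm_client.get_parameter(Name=param_name, WithDecryption=True)
        _cached_key = resp["Parameter"]["Value"]
    return _cached_key
```

In a Lambda module you would create the client once at module level, for example `ssm = boto3.client("ssm")`, and call `get_ipdb_key(ssm)` inside the handler.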

IAM Policy

I wrote the Python code below to read the parameter name from the IPDB environment variable, then fetch and decrypt the parameter from SSM. Later, I use the key to call the AbuseIPDB API:

```python
ssm = boto3.client("ssm")

# IPDB holds the parameter's name, not the key itself
param_name = os.environ["IPDB"]

# WithDecryption=True asks SSM to decrypt the SecureString via KMS
resp = ssm.get_parameter(Name=param_name, WithDecryption=True)
ipdb_key = resp["Parameter"]["Value"]
```

To make this ssm.get_parameter call, the Lambda’s IAM role needs permission both to read the parameter and to decrypt it. In iam.tf, I grant both in the policy document:

```hcl
data "aws_iam_policy_document" "allow_get_ipdb_param" {
  statement {
    actions   = ["ssm:GetParameter"] # <- get permission
    resources = [data.aws_ssm_parameter.ipdb.arn]
  }

  ...

  statement {
    actions = ["kms:Decrypt"] # <- decrypt permission
    ...
  }
}
```

Lambda Function

For the Lambda function, I use a Python module called ipdb_call.py. As its imports show, it depends on httpx, which isn’t part of the Python standard library:

```python
import asyncio
import json
import os

import boto3
import httpx
```

I install the dependencies from a requirements.txt file. boto3 isn’t in the standard library either, but the AWS Lambda Python runtime already includes it, so I leave it out of requirements.txt. To prepare the deployment package, I run the following commands from the project root:

```shell
# create an S3 bucket
aws s3api create-bucket --bucket=python-ipdb --region=us-east-1

# create a zip file of the build
mkdir -p build
pip install -r requirements.txt -t ./build
cp ipdb_call.py ./build/
cd build
zip -r ../<zip_filename.zip> . # <- zip the contents of ./build!
cd ..

# push the zip into the S3 bucket
aws s3 cp <zip_filename.zip> s3://python-ipdb/v1.0.0/<zip_filename.zip>
```

The Lambda function resource points at the uploaded archive: s3_bucket is the bucket created above, and s3_key is the object key, made up of the v1.0.0/ prefix and the zip file name. source_code_hash is computed from the local copy of the zip, so a rebuilt archive triggers a code update on the next apply:

```hcl
resource "aws_lambda_function" "ipdb" {
  function_name    = "ipabuse_check"
  s3_bucket        = "python-ipdb"
  s3_key           = "v1.0.0/ipdb_003.zip"
  handler          = "ipdb_call.main"
  runtime          = "python3.12"
  source_code_hash = filebase64sha256("../ipdb_003.zip")
  role             = aws_iam_role.lambda_exc.arn

  ...
}
```

API Gateway — Integration

In main.tf, I define an API Gateway HTTP API with a CORS configuration that allows browser requests only from my subdomain:

```hcl
resource "aws_apigatewayv2_api" "http_api" {
  name          = "ipdb-http-api"
  protocol_type = "HTTP"

  cors_configuration {
    allow_origins = ["https://ipcheck.seanbrown.org"]
    allow_methods = ["GET"]
    ...
  }
}
```

CORS is enforced by browsers, not by API Gateway: it stops scripts on other origins from reading the response, but it doesn’t authenticate requests. Anyone who gets hold of the API Gateway URL can still call it directly, with curl for example.
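A toy model makes the point concrete. It is plain Python with no AWS calls, and `handle_get`, `browser_can_read`, and `Response` are made-up names for illustration. It covers only simple GET requests and ignores preflight and credentialed requests: the server returns the body to every caller and merely attaches `Access-Control-Allow-Origin` for allowed origins, while the browser decides whether a page may read it.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Mirrors allow_origins in the cors_configuration block.
ALLOWED_ORIGINS = {"https://ipcheck.seanbrown.org"}


@dataclass
class Response:
    body: str
    headers: Dict[str, str] = field(default_factory=dict)


def handle_get(origin: Optional[str], body: str) -> Response:
    """Toy server: the body goes to every caller; CORS only adds a header."""
    resp = Response(body=body)
    if origin is not None and origin in ALLOWED_ORIGINS:
        resp.headers["Access-Control-Allow-Origin"] = origin
    return resp


def browser_can_read(page_origin: str, resp: Response) -> bool:
    """Browser-side check: scripts on page_origin may read the body only if allowed."""
    allowed = resp.headers.get("Access-Control-Allow-Origin")
    return allowed == "*" or allowed == page_origin


# A page on another origin receives the response, but the browser hides it.
other = handle_get("https://example.com", '{"ip": "203.0.113.7"}')
print(browser_can_read("https://example.com", other))  # False
# curl sends no Origin header and performs no check, so it just reads the body.
print(handle_get(None, '{"ip": "203.0.113.7"}').body)  # {"ip": "203.0.113.7"}
```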

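Next, I add an API Gateway integration that proxies requests to the Lambda function (API Gateway also needs an aws_lambda_permission resource that lets it invoke the function, which isn’t shown here):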

```hcl
resource "aws_apigatewayv2_integration" "ipdb" {
  api_id             = aws_apigatewayv2_api.http_api.id
  integration_type   = "AWS_PROXY"
  # Lambda integrations are always invoked with POST, whatever the client's method
  integration_method = "POST"
  integration_uri    = aws_lambda_function.ipdb.invoke_arn

  ...
}
```

VPS — Secrets Management

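A simple docker-compose.yml turns two local text files into Docker secrets. Compose mounts them into the container under /run/secrets/, so the values aren’t passed as environment variables; the environment variables only hold the file paths: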

```yaml
services:
  app:
    image: ipcheck:latest
    ports:
      - "3000:3000"
    secrets:
      - referrer
      - gateway
    environment:
      REFERRER: /run/secrets/referrer
      GATEWAY: /run/secrets/gateway

secrets:
  referrer:
    file: ./referrer.txt
  gateway:
    file: ./gateway.txt
```

In the Express app’s server.js, GATEWAY therefore holds the path to the secret file rather than the URL itself. The app uses it to load the API Gateway URL (gatewayBase, defined in the elided lines) and then calls the gateway:

```javascript
const gatewayPath = process.env.GATEWAY;

...

const GATEWAY = new URL(gatewayBase);

app.get('/data', async (req, res) => {
  ...
  try {
    const ip = `${req.ip}`;
    // Append the visitor's IP to the API Gateway URL
    const resp = await fetch(`${GATEWAY}${ip}`);
    ...
  } catch (err) {
    ...
  }
});
```

VPS — Nginx

Finally, Nginx acts as a reverse proxy, serving the container on port 3000 at ipcheck.seanbrown.org, the same origin allowed in the API Gateway CORS configuration:

```nginx
server {
    listen 443 ssl http2;
    server_name ipcheck.seanbrown.org;

    ...

    location / {
        proxy_pass http://localhost:3000/;
        ...
    }
}
```
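One practical detail ties the Nginx and Express pieces together. Behind the proxy, the TCP peer Express sees is Nginx (typically via the Docker network’s gateway address), not the visitor. So req.ip only reflects the visitor’s address if Nginx forwards it (for example with `proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;`) and Express is told to trust that hop with `app.set('trust proxy', ...)`. The elided parts of the config above may already handle this. The sketch below, in Python to match the Lambda code, models the walk over X-Forwarded-For that a trusted-proxy setting performs; `client_ip` and the trusted ranges are assumptions for illustration, not the repo’s code.

```python
import ipaddress
from typing import Iterable, List, Optional


def client_ip(remote_addr: str, x_forwarded_for: Optional[str],
              trusted: Iterable[str]) -> str:
    """Return the nearest address in the proxy chain that isn't a trusted proxy.

    remote_addr is the TCP peer (e.g. Nginx or the Docker gateway),
    x_forwarded_for is the raw header value, trusted lists CIDR blocks.
    """
    networks = [ipaddress.ip_network(cidr) for cidr in trusted]

    def is_trusted(addr: str) -> bool:
        try:
            ip = ipaddress.ip_address(addr)
        except ValueError:
            return False  # malformed entries are never treated as proxies
        if isinstance(ip, ipaddress.IPv6Address) and ip.ipv4_mapped is not None:
            ip = ip.ipv4_mapped  # Node reports IPv4 peers as ::ffff:a.b.c.d
        return any(ip in net for net in networks)

    # Order hops from nearest to farthest: socket peer, then XFF right to left.
    hops: List[str] = [remote_addr]
    if x_forwarded_for:
        entries = [e.strip() for e in x_forwarded_for.split(",") if e.strip()]
        hops.extend(reversed(entries))

    for hop in hops:
        if not is_trusted(hop):
            return hop
    # Every hop is a trusted proxy: fall back to the farthest one.
    return hops[-1]


# Assumed setup: Nginx reaches the container via a Docker gateway in 172.16.0.0/12.
TRUSTED = ["127.0.0.0/8", "172.16.0.0/12"]
# The left-most entry was supplied by the client and is ignored.
print(client_ip("172.17.0.1", "198.51.100.9, 203.0.113.7", TRUSTED))  # 203.0.113.7
```

Trusting only the proxy’s address or subnet is the important choice here: with `app.set('trust proxy', true)`, Express takes the left-most X-Forwarded-For entry, which a visitor can set to any value.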